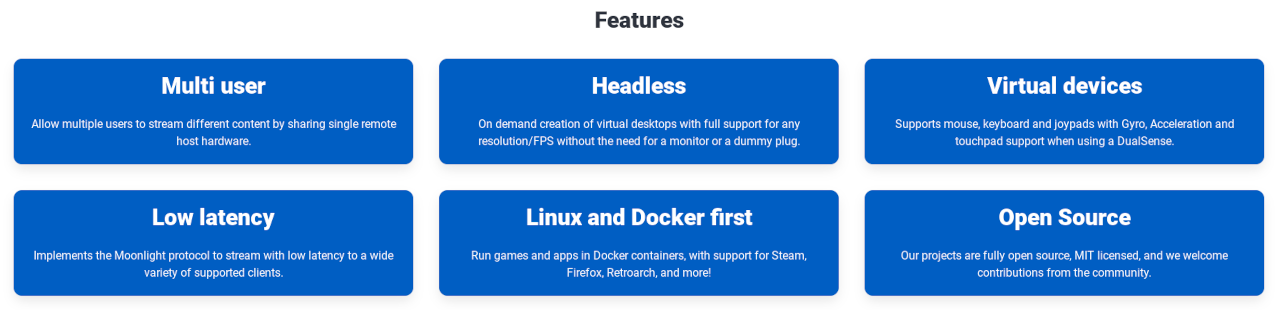

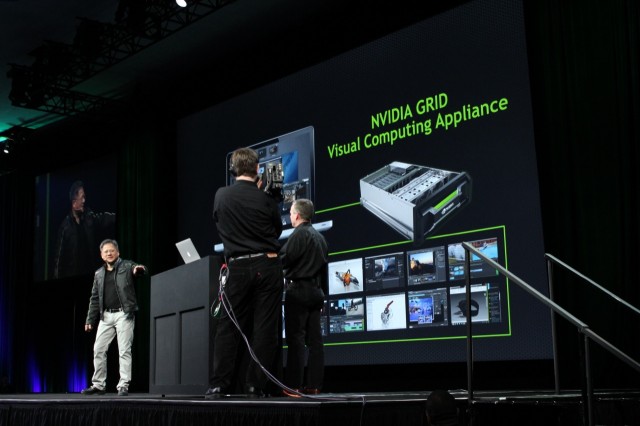

Nvidia CEO Jen-Hsun Huang directs a demo of the Grid Visual Computing Appliance (VCA) during his GTC 2013 keynote.

Andrew Cunningham

SAN JOSE, CA—One of the announcements embedded in Nvidia CEO Jen-Hsun Huang’s opening keynote for the company’s GPU Technology Conference Tuesday was a brand new server product, something that Nvidia is calling the Grid Visual Computing Appliance, or VCA.

The VCA is a buttoned-down, business-focused cousin to the Nvidia Grid cloud gaming server that the company unveiled at CES in January. It’s a 4U rack-mountable box that uses Intel Xeon CPUs and Nvidia’s Grid graphics cards (née VGX), and like the Grid gaming server, it takes the GPU in your computer and puts it into a server room. The VCA serves up 64-bit Windows VMs to users, but unlike most traditional VMs, you’ve theoretically got the same amount of graphical processing power at your disposal as you would in a high-end workstation.

However, while the two Grid servers share a lot of underlying technology, they have very different use cases and audiences. We met with Nvidia to learn more about just who this server is for and what it’s like to use and administer one.

What’s in it?

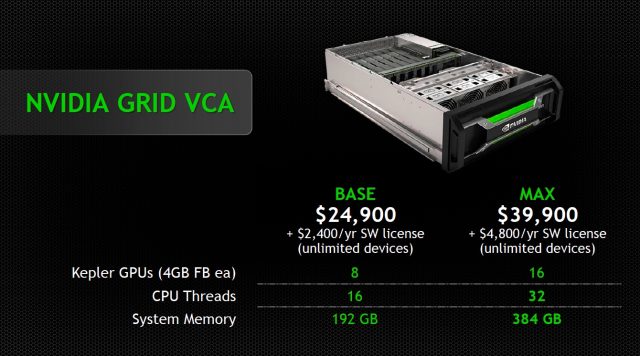

The VCA comes in two different versions: one with a single eight-core, 16-thread Intel Xeon E5-2670 CPU, 192GB of RAM, and eight GPUs across four cards that can support up to eight simultaneous users, and one that doubles all of those specifications and can support 16 simultaneous users. Both systems are the same physical size, however, and the low-end version can be upgraded to support more users if necessary.

The number of users the servers can handle at once comes down to the number of GPUs available: the VCA uses the company’s Grid K2 graphics cards, which essentially place two Quadro K5000-esque GPUs (each with 4GB of GDDR5 graphics memory) on the same card. As you may recall from our original write-up, each of these GPUs can only handle a single user at a time, which is good for people who need maximum performance but not optimal for organizations with larger numbers of less-demanding users.

Each user is also given access to 32GB of memory upon connecting to the server. The CPU is the only resource that concurrent users really have to share with each other, which could be a bottleneck depending on your users’ workload—there are simply fewer CPU resources available than there would be if each user had his or her own dual- or quad-core workstation. The entire server should consume just over 2000W of power under full load, though that number may change.
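To put the CPU-sharing caveat in numbers, here is a back-of-the-envelope sketch using only the core, thread, and user counts given above. It assumes CPU time is split evenly across fully occupied seats, which is a simplification; a real hypervisor schedules threads dynamically, so a lone user on a quiet server could get far more.

```python
# Back-of-the-envelope CPU sharing on the two Grid VCA configurations.
# Socket/thread counts and user limits come from the article; the even
# split across users is an assumption (real scheduling is dynamic).

CONFIGS = {
    # name: (CPU sockets, threads per Xeon E5-2670, max concurrent users)
    "8-user": (1, 16, 8),
    "16-user": (2, 16, 16),
}


def threads_per_user(sockets: int, threads_per_cpu: int, users: int) -> float:
    """Hardware threads each user gets if every seat is busy and CPU time
    is divided evenly."""
    return sockets * threads_per_cpu / users


for name, (sockets, threads, users) in CONFIGS.items():
    share = threads_per_user(sockets, threads, users)
    print(f"{name}: {share:.0f} threads per user at full load")
```

Both models work out to two hardware threads (one hyperthreaded Xeon core) per user at full load, which is why CPU-heavy workloads may feel the squeeze compared with a dedicated dual- or quad-core workstation.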

Who’s it for?


Nvidia is definitely emphasizing the “appliance” part of the VCA: it’s a standalone box that the company intends for use in small shops without dedicated IT departments. For companies that need to support more users or need something that integrates more fully into an enterprise IT environment, Nvidia will happily point you to one of its partners, companies like VMware, Citrix, and Microsoft, which offer Grid-equipped products that work with traditional virtualization products (Nvidia is attempting to walk the fine line between offering its own server products and stepping on the toes of the hardware partners it relies on).

The VCA is meant to be easy to set up and manage. It all starts with a “template” virtual machine, which is offered up every time a user connects to the VCA using the client software. By switching the server into management mode, a user can log in with the client software, install new software, upgrades, and patches, and then save the result as a new template.

“It’s the same thing as maintaining one workstation,” Nvidia Product Manager Ankit Patel told Ars. “They’re literally maintaining 16 systems by maintaining one.”

Because of the way the server works—providing a fresh VM every time a user connects—and because the VCA doesn’t offer much in the way of local storage, you will need to save your files to some sort of network-attached storage instead. Patel told us that the server would be able to preserve specific settings for applications, though, for users with heavily customized workflows.

Since the VCA is offering up virtualized Windows, one potential use case is in shops that primarily rely on OS X or Linux for day-to-day tasks but need to switch into Windows to run specific Windows-only applications (clients are available for Windows, OS X, and Linux at this point). Most of the demos Nvidia showed both during Jen-Hsun Huang’s keynote and on the exhibition floor were on Macs. Obviously, this also allows systems that have no business running workstation applications—Ultrabooks, cheap desktops, and other such systems—to run these applications pretty well.

At least in Nvidia’s controlled testing environment, performance seemed very good: you’d be hard-pressed to tell the difference between software running on the VCA server and software running locally. Patel told us that about 10Mbps of bandwidth would provide a good experience at 1920×1200 (this is the default upper limit, though administrators can change it), and that the system can dynamically ramp down the quality of the stream depending on a given user’s connection.
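For anyone sizing a network for one of these boxes, the 10Mbps default cap gives a rough worst-case figure for the display streams alone. The sketch below assumes every user streams at the cap at the same time; since the stream ramps down on weaker connections, real traffic should usually be lower, while file traffic to network storage would come on top of it.

```python
# Rough worst-case aggregate bandwidth for a fully loaded Grid VCA.
# The 10 Mbps per-user default cap at 1920x1200 is the figure Nvidia
# quoted; assuming every user hits the cap simultaneously is a worst case.
# Network-storage traffic is not included.

DEFAULT_CAP_MBPS = 10  # per-user default upper limit (admins can change it)


def peak_aggregate_mbps(users: int, cap_mbps: float = DEFAULT_CAP_MBPS) -> float:
    """Upper bound on remote-display traffic if every user streams at the cap."""
    return users * cap_mbps


for users in (8, 16):
    print(f"{users} users: up to {peak_aggregate_mbps(users):.0f} Mbps")
```

At the default setting that is up to 80Mbps for the eight-user model and 160Mbps for the 16-user model; lowering the cap scales the total down proportionally.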

What does it cost?


Nvidia won’t be selling the Grid VCA directly to customers, but through its value-added resellers (VARs), who will also be tasked with providing hardware support. The eight-user Grid VCA’s hardware costs $24,900, plus a $2,400-per-year license to use Nvidia’s software; the 16-user model costs $39,900 up-front plus $4,800 per year for the software license.
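Spreading those prices across seats makes the two models easier to compare. This sketch uses only the hardware prices and annual license fees quoted above and assumes every seat is in use; client devices and the application software you run inside the VMs are left out.

```python
# Per-seat cost of the two Grid VCA models over a given number of years,
# using the quoted hardware prices and annual software license fees.
# Assumes all seats are used; excludes client devices and app licenses.

MODELS = {
    # name: (hardware price in USD, annual license in USD, seats)
    "8-user": (24_900, 2_400, 8),
    "16-user": (39_900, 4_800, 16),
}


def cost_per_seat(hardware: int, license_per_year: int, seats: int, years: int) -> float:
    """Hardware plus `years` of license fees, divided evenly across seats."""
    return (hardware + license_per_year * years) / seats


for name, (hw, lic, seats) in MODELS.items():
    for years in (1, 3):
        print(f"{name}, {years} yr: ${cost_per_seat(hw, lic, seats, years):,.2f} per seat")
```

The license works out to $300 per seat per year on both models, so the real difference is hardware: about $3,112.50 per seat for the eight-user box versus $2,493.75 for the 16-user box, a useful figure to hold up against the $3,000-plus price of a desktop Quadro K5000 workstation.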

Both of the software licenses cover unlimited client devices, useful in cases where employees have multiple work machines or want to use their home computers with the server. The cost of the Windows licenses is rolled into the cost of the server, but you’ll still need to buy whatever software packages you’d like to run in your VMs.

These prices sound a bit high, though they’re not absurd in the context of high-end workstations—bear in mind that we just reviewed a $6,300 workstation laptop back in January, and desktop workstations with the Quadro K5000 GPU (to which Nvidia compares the performance of the Grid K2 GPUs) easily run upwards of $3,000 apiece. However, the price of the VCA will be inflated if you also need to purchase client computers to connect to it with.

If your workload is more CPU heavy, if your users don’t mind working in Windows, or if you don’t need a GPU that’s as powerful as the K5000, you can easily equip eight users with new workstations for much less than $25,000, though that doesn’t factor in the cost of managing those systems. In short, the VCA’s value proposition does make sense, but only if you’re in its niche: a small organization with no IT outfit that still needs lots of graphics horsepower.

What comes next?

“I want to keep it simple, I want to get the product in the hands of customers,” said Patel, “and I want to get more feedback so I can quickly follow up and give the right features that they want.”

One focus will be working on the number of users supported by each server; as we mentioned when we originally covered the Grid graphics cards themselves, Nvidia will eventually enable multiple users per GPU for the K2 card so that more people can take advantage of its power at once. Patel also said that Nvidia would like to push in the opposite direction.

“It’s going to be interesting as far as what workload you put on there,” he said. “Because in terms of a system I have a CPU-to-GPU ratio—I’m focused more on GPU-intensive applications, so I’m actually more interested in going the other way. Not multiple users per GPU, but multiple GPUs per user.”

Right now, a single user can open multiple workspaces on the same client computer, but those workspaces can’t pool hardware resources or share data between them. Theoretically, Nvidia could make the entire server’s hardware available for one user with a software update.

Future software updates should also allow administrators to synchronize the template VMs across multiple VCA servers in the event that they have more than one; add support for more client operating systems, depending on their users’ demands; and offer the ability to serve up virtualized Linux in addition to Windows, though Patel told us there were “a lot of obstacles” to overcome before that would be possible.

Virtualizing Linux on the VCA could be important to supporting more than eight or 16 users per server, hardware limitations aside, since the VCA would need to come with a Windows license for each user that intended to use the server. “Those costs are going to get weird,” said Patel.


https://arstechnica.com/information-technology/2013/03/nvidia-plans-to-turn-ultrabooks-into-workstations-with-grid-vca-server/