By Pankaj Singhal (Engineering Team, Hike)

Our journey in real-money gaming began with Rush at the end of 2020. The initial challenge was to bring the app to life as quickly as possible, and the first game on our roster was the beloved Indian classic, Carrom.

After a careful evaluation of available solutions, we chose Smartfox Server. This decision was steered by a multitude of features it brought to the table:

- Robust Connection: Providing a persistent and reliable link with every client for a seamless gaming experience.

- Versatile Framework: Offering comprehensive Game, Room, and Zone management capabilities at the backend.

- Ease of Implementation: Feature-rich yet user-friendly, simplifying integration for Unity and backend developers.

- Widespread Recognition: Widely accepted among developers, streamlining our development process.

- Supportive Community: A thriving developer community ensuring ample support and resources.

Smartfox Server served us well as we launched more games alongside Carrom over the next few months, including Callbreak, Quiz, and Speed Leedo. It handled the initial backend load flawlessly, becoming an integral part of our journey. However, as our user base swelled, so did our challenges, chief among them scale.

This blog traces our evolution from Smartfox to an in-house gaming server, a shift that transformed our performance and scalability.

In March 2021, we released Speed Leedo and it quickly became a sensation. Over the next two years, our concurrent user count skyrocketed. Initially, Smartfox handled the load well, but as our user base grew, complaints flooded in. Gameplay lags and network disruptions became the norm.

Upon investigation, we uncovered Smartfox’s limitations. Our virtual machines (VMs) were bottlenecked, with CPU usage spiking to 70–80% and memory usage hitting 80%. Because Smartfox is a third-party server, we couldn’t easily dive into the framework to fix these issues.

To address the immediate concern, we opted for vertical scaling, gradually increasing the VM size from 4 CPU cores and 8 GB RAM to 16 CPU cores and 128 GB RAM (4x the CPU, 16x the memory). This bought us some breathing room, but it was evident that it wouldn’t be a sustainable fix as our user base continued to surge.

Further investigation revealed a steadily increasing trend in memory usage. Resource utilization started out low but climbed over the course of the day as more games were played.

Despite thorough checks for memory leaks, finding a solution within the Smartfox framework proved elusive. But this information served as a good data point for our next step in tackling the scale challenge.

As a stopgap, we implemented regular nightly failovers, migrating traffic during lean hours. This, coupled with vertical scaling, allowed us to survive for a good amount of time. However, we were well aware that a more permanent solution was required: a move towards a distributed architecture.

We revamped our native and gaming architecture to distribute traffic across multiple servers. This involved careful consideration of factors like:

- Pool Amounts: Low-cost tables had a heavy user base ("fat tables") that needed to be spread across multiple machines, whereas high-cost tables had a much smaller user base, so several of them could be combined on a single machine.

- Game Context: Our games were short-lived (under 10–12 minutes), so we kept game state in memory with no persistence layer. As a result, all users of the same game instance had to be routed to the same server.
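The routing constraint described above can be illustrated with a minimal sketch. This is not the production routing logic; the pool names, tier labels, and the `pick_server` helper are all hypothetical, and hashing the game instance ID is just one simple way to guarantee that every player of a game lands on the same machine.

```python
import hashlib

# Hypothetical server pools per table tier (names are illustrative only).
# Low-cost "fat" tables are spread over several machines, while
# high-cost tables with fewer users share a single machine.
SERVER_POOLS = {
    "low_cost": ["game-vm-1", "game-vm-2", "game-vm-3"],
    "high_cost": ["game-vm-4"],
}


def pick_server(tier: str, game_instance_id: str) -> str:
    """Return the server that should host every player of this game instance."""
    pool = SERVER_POOLS[tier]
    # Use a stable hash (not Python's per-process salted hash()) so that
    # every router process maps the same game instance to the same server.
    digest = hashlib.sha256(game_instance_id.encode("utf-8")).digest()
    return pool[int.from_bytes(digest[:8], "big") % len(pool)]


if __name__ == "__main__":
    # Both players of the same game instance resolve to the same server.
    for player in ("alice", "bob"):
        print(player, "->", pick_server("low_cost", "ludo-game-1001"))
```

With plain modulo hashing, changing the pool size remaps game instances. Because games are short-lived, one plausible way to handle this would be to apply a new pool only to newly created games, though that is an assumption rather than something described here.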

After taking all of these factors into consideration, we shipped the distributed architecture to production and gradually introduced more machines into the system. The rollout marked a significant improvement.

As Rush’s popularity continued to soar, a couple of VMs proved insufficient. Gradually, we scaled horizontally, reaching a configuration of 9 VMs, each with 16 cores and 128 GB RAM.

While this solution provided relief, it brought its own set of challenges:

- High Costs: The increased number of VMs drove up our infrastructure spend.

- Licensing Fees: Each Smartfox Server instance required its own license.

- Operational Complexities: Production deployments became a huge task with so many machines to manage.

We were sailing on a high tide and the need for a more efficient and cost-effective solution became evident as our user base continued to grow.

Our choice of Netty for the in-house gaming server was influenced by our past success. Having built a large-scale messaging app on Netty, efficiently handling millions of connections and billions of messages on a few machines, we were confident in its capabilities. This experience made Netty the natural pick for our gaming infrastructure.

This decision came from what we had learned before, not just from picking a tool. With Netty, we built a custom solution for gaming in just a few months, one that addressed our immediate problems and left room to grow in the future.

Our Netty-based Game Server architecture comprised two key components:

- Core Network Layer: Drawing inspiration from our legacy Hike Messenger Server architecture, we developed a lightweight persistent connection layer for two-way communication with clients.

- Gaming Server Layer: Inspired by Smartfox Server, our pluggable game server included components like Zones, Rooms, User Manager, Zone Manager, and Room Manager.

This fusion allowed us to build a customized game server on Netty, empowering us with deep insights and fine-tuning capabilities.
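To make the Gaming Server Layer concepts concrete, here is a rough sketch of how Zones and Rooms might relate to each other. It is written in Python purely for illustration and does not reflect the actual server code. The class shapes, method names, and the idea of one zone per game are assumptions.

```python
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass
class Room:
    """A single game table holding the IDs of connected users."""
    room_id: str
    capacity: int
    user_ids: Set[str] = field(default_factory=set)

    def join(self, user_id: str) -> None:
        # Reject new users once the table is full; re-joining is a no-op.
        if user_id not in self.user_ids and len(self.user_ids) >= self.capacity:
            raise RuntimeError(f"room {self.room_id} is full")
        self.user_ids.add(user_id)

    def leave(self, user_id: str) -> None:
        self.user_ids.discard(user_id)


@dataclass
class Zone:
    """A logical grouping of rooms (assumed here to be one zone per game)."""
    name: str
    rooms: Dict[str, Room] = field(default_factory=dict)

    def create_room(self, room_id: str, capacity: int) -> Room:
        room = Room(room_id, capacity)
        self.rooms[room_id] = room
        return room


class ZoneManager:
    """Registry that hands out one Zone object per zone name."""

    def __init__(self) -> None:
        self._zones: Dict[str, Zone] = {}

    def get_or_create(self, name: str) -> Zone:
        if name not in self._zones:
            self._zones[name] = Zone(name)
        return self._zones[name]
```

A caller might use it as `ZoneManager().get_or_create("speed-leedo").create_room("table-1", capacity=4)`. Keeping these objects in plain in-memory maps mirrors the in-memory approach the post describes, which is also why all players of a game need to reach the same server.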

Before releasing our Netty-based in-house gaming server into production, we put it through rigorous QA and load testing. The results were exceptional: it supported thousands of concurrent users with significantly lower resource consumption than Smartfox Server.

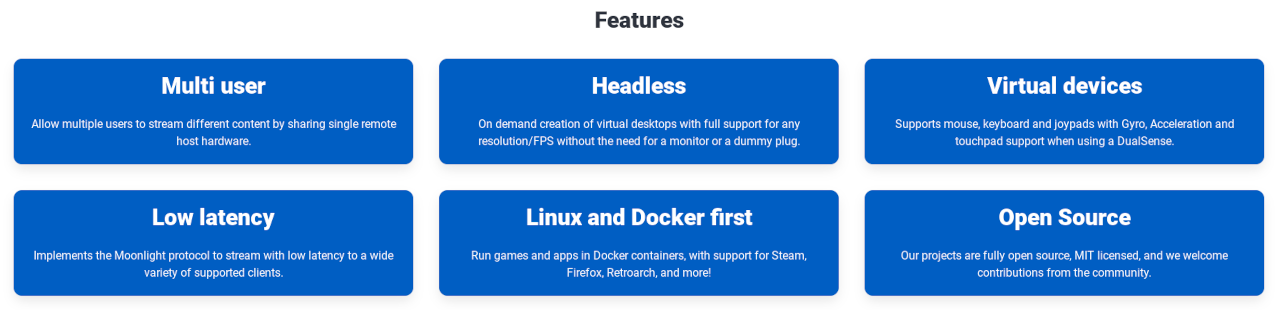

VM Resource Utilization:

After gaining confidence from our load test numbers, we migrated our entire production load from 9 large machines to 6 small machines, reducing our requirements as follows:

- CPU by 3x (9 × 16 cores ➝ 6 × 8 cores)

- Memory by 24x (9 × 128 GB ➝ 6 × 8 GB)

This improvement translated to savings of approximately $6,000 per month.
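The reduction factors follow directly from the fleet sizes quoted above. The short check below works through the arithmetic; the `reduction_factor` helper is only an illustrative name.

```python
def reduction_factor(old_vms: int, old_per_vm: int, new_vms: int, new_per_vm: int) -> float:
    """Ratio of total capacity before the migration to total capacity after."""
    return (old_vms * old_per_vm) / (new_vms * new_per_vm)


cpu = reduction_factor(9, 16, 6, 8)    # 144 cores -> 48 cores
mem = reduction_factor(9, 128, 6, 8)   # 1152 GB -> 48 GB

print(f"CPU reduced {cpu:g}x, memory reduced {mem:g}x")
# prints: CPU reduced 3x, memory reduced 24x
```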

We rolled out our in-house gaming server incrementally, starting with Speed Leedo and then extending it to most of our major games.

Buoyed by the success of our in-house gaming server, our future roadmap envisions a transition from our current in-memory approach to a fully distributed game backend architecture, incorporating a persistent layer of cache/DB. This evolution will not only enhance fault tolerance but also fortify our infrastructure for future scalability challenges.

The transition from Smartfox to an in-house server marks a transformative chapter in our gaming journey. Beyond addressing immediate challenges, our Netty-powered solution positions us for sustained success in the ever-evolving landscape of real-money gaming. This journey underscores the importance of adopting scalable, long-term solutions to navigate the complexities of a rapidly growing gaming ecosystem.

https://blog.hike.in/elevating-gaming-backend-achieving-10x-scale-0e6ae43033bd